UX Evals

Evaluate AI through real user experience with Outset UX Evals

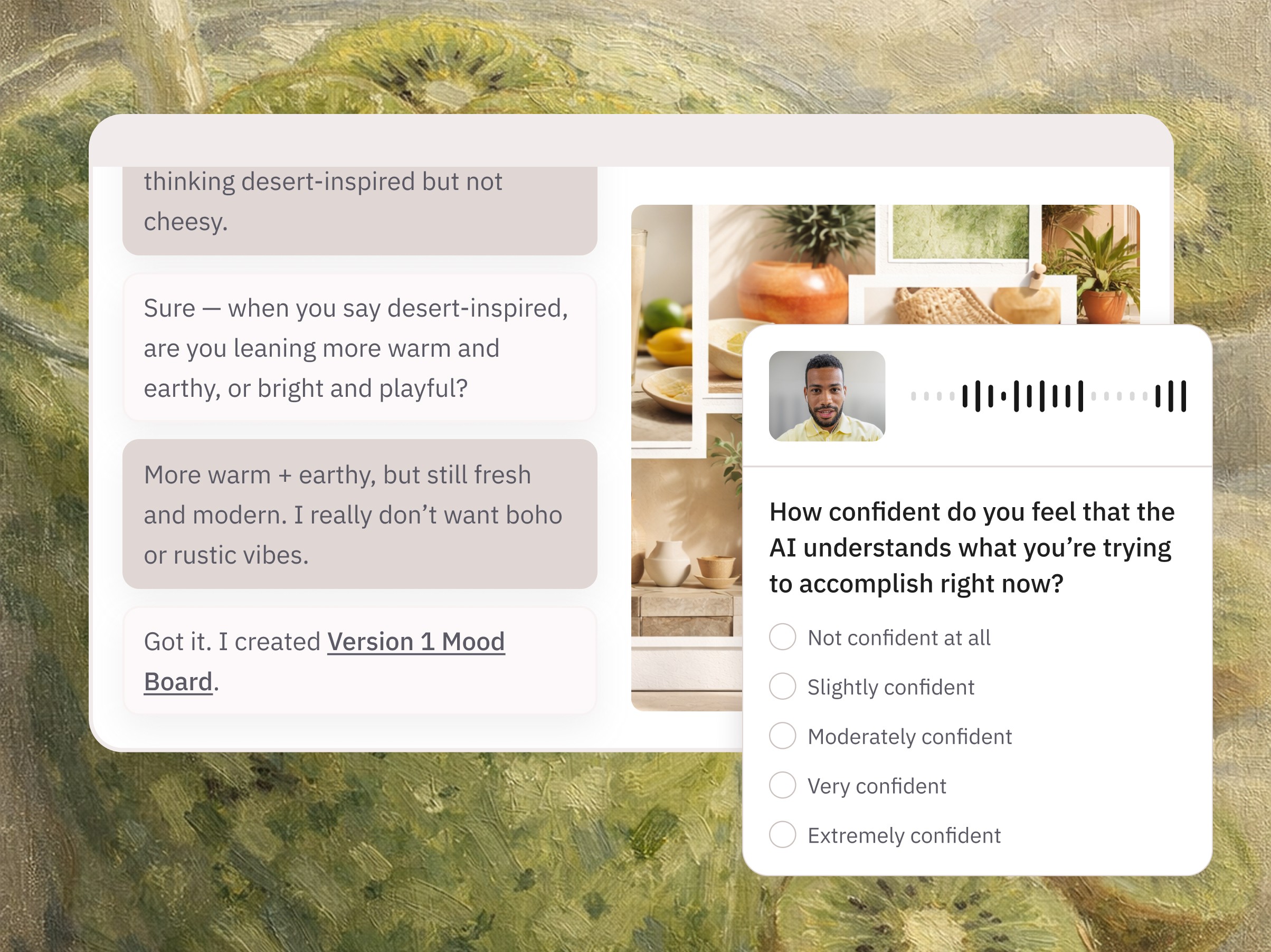

UX Evals take traditional usability testing into the world of tokens and non-deterministic outcomes to understand the real user experience.

Conceived by Microsoft's Copilot team, powered by Outset.

Use UX Evals to understand AI experiences — not just outputs.

The advantage of UX evals

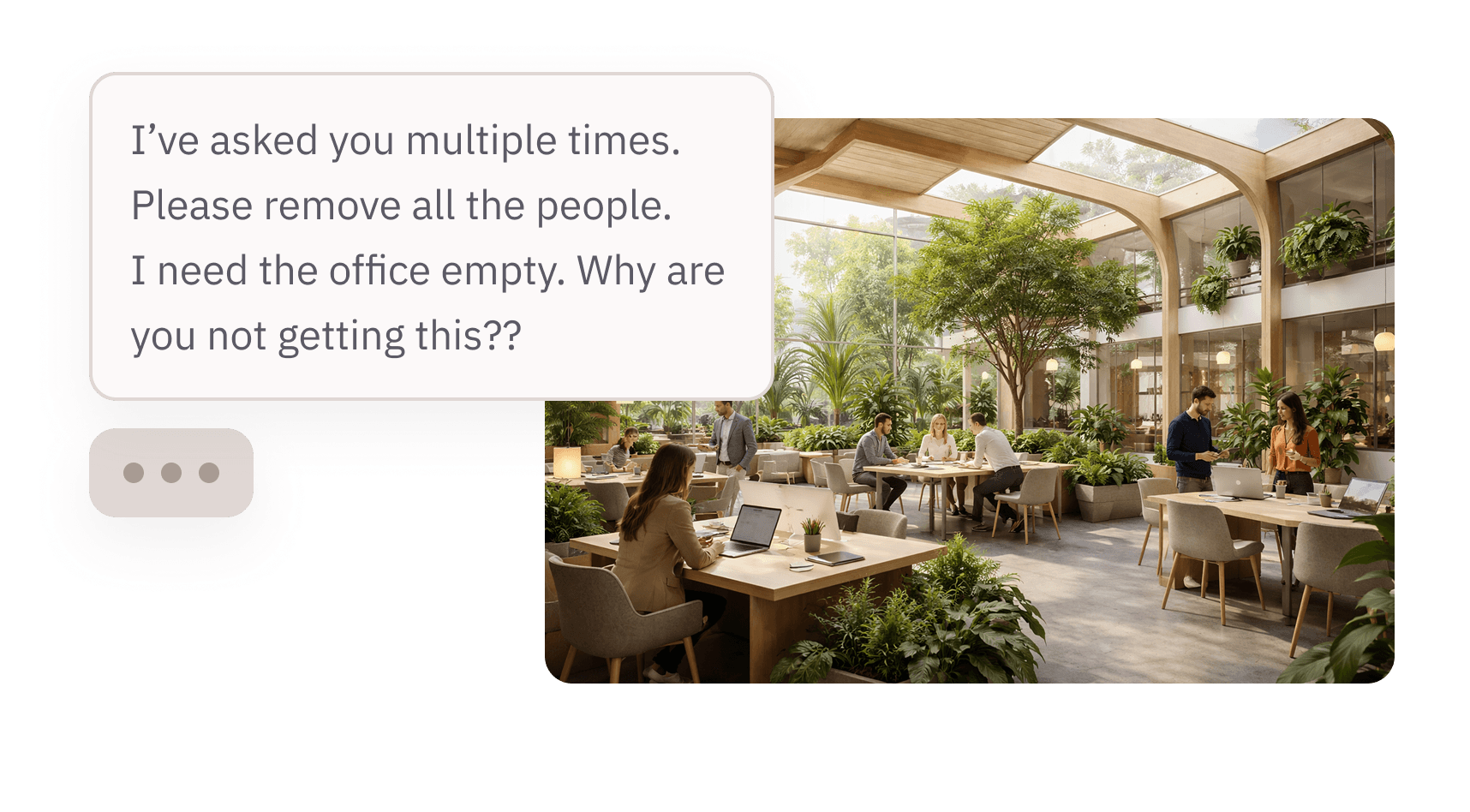

As products move from pixels to tokens, each user’s experience becomes unique.

Traditional AI evals and usability testing both fall short when it comes to deriving insights from first-person, multimodal, multi-turn interactions.

UX Evals ground evaluation in how users actually experience AI, across real conversations and real contexts.

UX evals are built for real-world AI use

Why UX evals go beyond traditional approaches

Beyond traditional AI evals

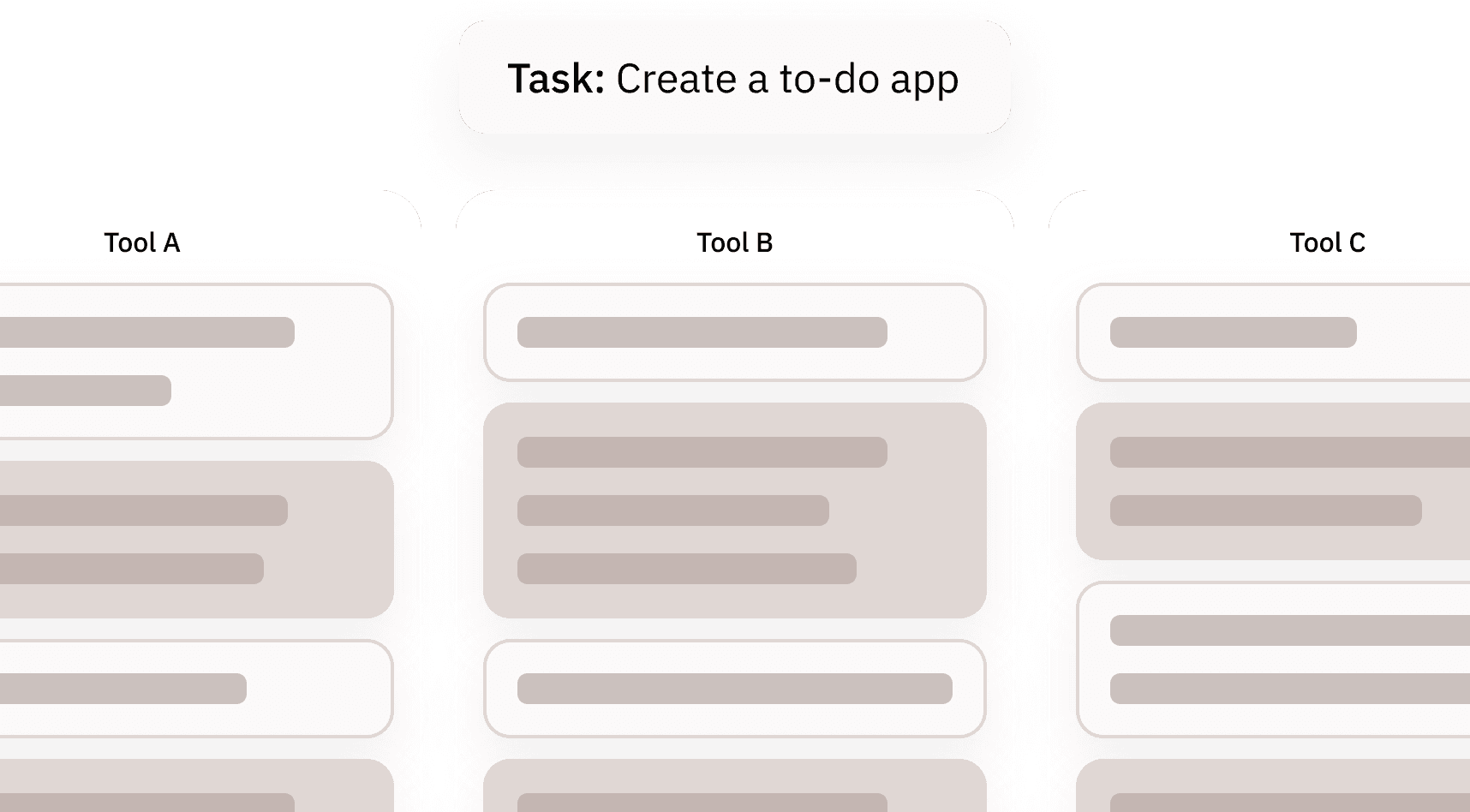

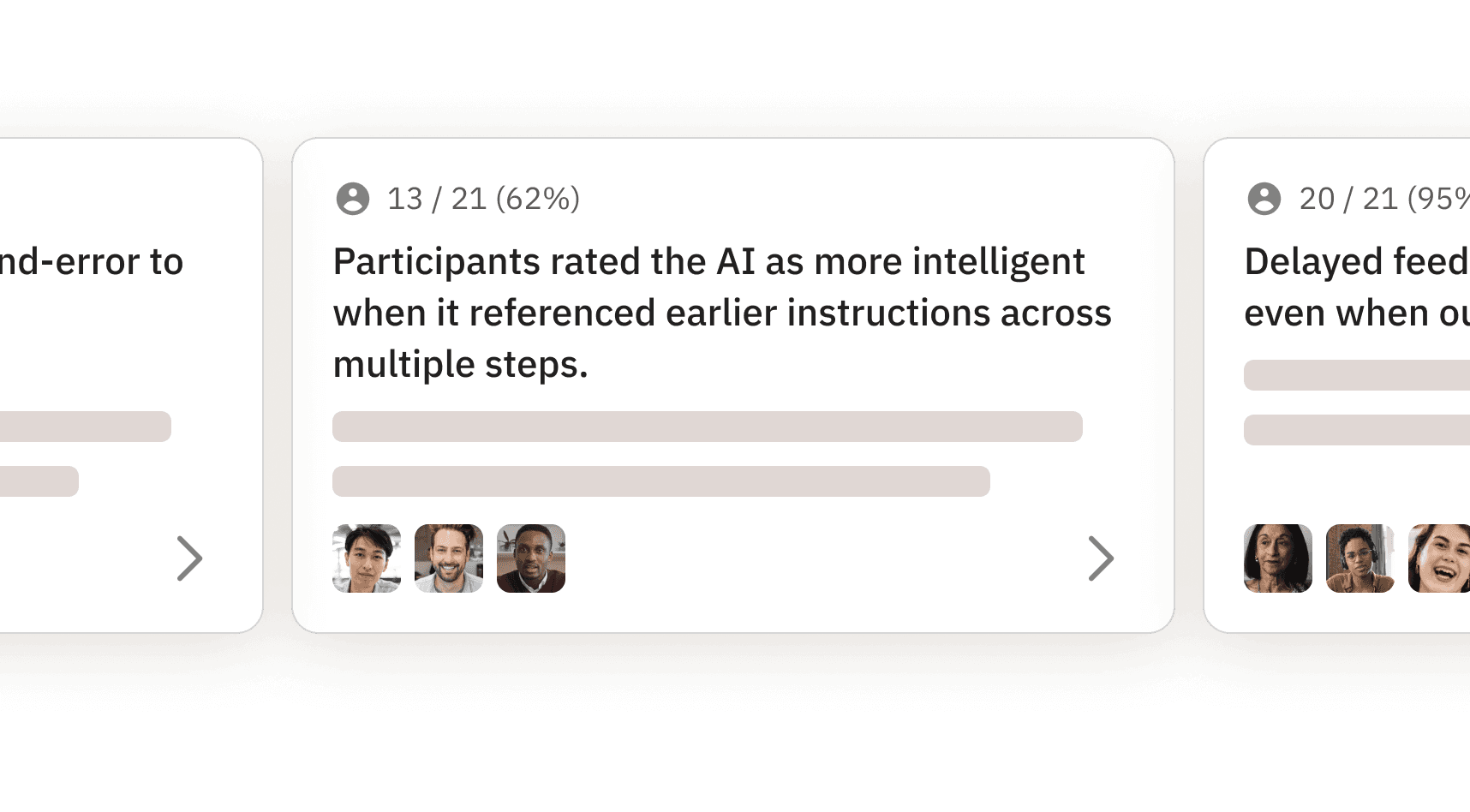

Machine evals and human graders test whether AI meets predefined criteria. UX Evals test whether users actually prefer and value the AI experience, as judged by the users themselves.

Beyond usability testing

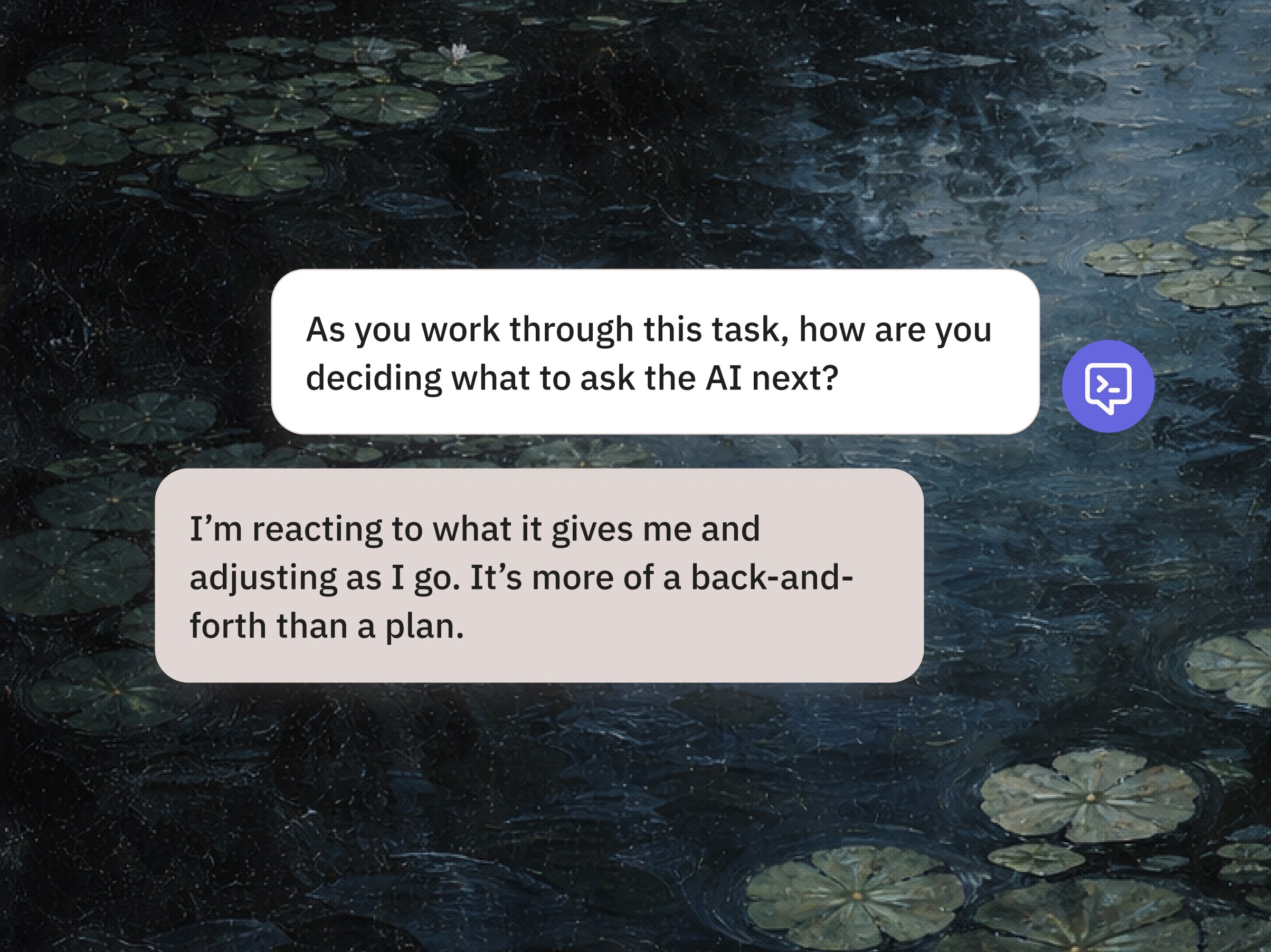

Usability testing was built for static pixels and flows. UX Evals are built for conversations — where outcomes are non-deterministic and value is subjective. You don’t “use” AI. You collaborate with it.

Resources for researchers running UX evals

White paper

Introducing UX Evals

The “why” behind this new methodology, written by the Microsoft team that developed it.

Jan 22, 2026 • Christopher Monnier

Guide

Outset UX Evals: A How To Guide

A step-by-step resource for researchers looking to adopt UX Evals.

Jan 22, 2026 • Christopher Monnier

Event

From Pixels to Tokens: A UX Evals Workshop

A hands-on workshop on how to implement UX Evals, hosted by the Microsoft Copilot team that developed the methodology. RSVP now.

Feb 4, 2025 • 12-1pm PST (Virtual)